Optimize Federal Data for Performance, Cost, and Mission Readiness

Federal agencies generate more data than ever. But without intelligent optimization, data sprawl drives rising costs, operational friction, and delayed insight. Hitachi helps agencies automate data lifecycle decisions, reduce storage waste, and optimize data pipelines without sacrificing access, governance, or security.

The Challenge

Federal Data Optimization Problem

Federal agencies face accelerating data growth across mission systems, business applications, analytics platforms, and emerging AI workloads. Without intelligent optimization, data environments become costly, inefficient, and increasingly difficult to govern.

Manual lifecycle policies, siloed tools, and limited visibility force agencies to over-provision storage, retain unnecessary data, and rely on brittle scripts to move and manage information. As data volumes grow, so do infrastructure costs, performance bottlenecks, and operational risk.

Common challenges agencies face include:

- Exploding storage costs driven by redundant, inactive, or poorly tiered data

- Manual ETL and lifecycle processes that slow analytics and increase operational risk

- Limited visibility into where data lives, how it’s used, and what can be optimized safely

The Solution

How Hitachi Optimizes Data

Hitachi delivers policy-driven data optimization that balances cost, performance, and governance across the full data lifecycle, without forcing agencies into cloud-only or vendor-locked architectures. By combining data integration, metadata intelligence, and automated lifecycle management, agencies gain precise control over how data is ingested, transformed, stored, tiered, and retained.

Unlike point tools that optimize only storage or only pipelines, Hitachi unifies data movement, quality, metadata, and lifecycle policies into a single approach. Optimization decisions are driven by usage patterns, business value, compliance requirements, and performance needs, allowing agencies to continuously optimize their data environments while maintaining auditability, accessibility, and mission readiness.

Why Hitachi for Data Optimization

- Policy-Driven Automation – Eliminate manual scripts with rules-based lifecycle and pipeline optimization

- Hybrid-Ready by Design – Optimize data across on-prem, secure, disconnected, and hybrid environments

- Governance-Aligned Optimization – Reduce cost without compromising retention, lineage, or compliance

- Future-Ready for AI & Analytics – Prepare data pipelines and storage tiers for AI-scale workloads

Products That Power Data Optimization

Federal Use Cases

- Large-scale data lifecycle optimization and tiering

- ETL automation for enterprise data warehouses

- AI and analytics pipeline acceleration

- Storage cost reduction without loss of data access

- Cloud-migration readiness and optimization assessments

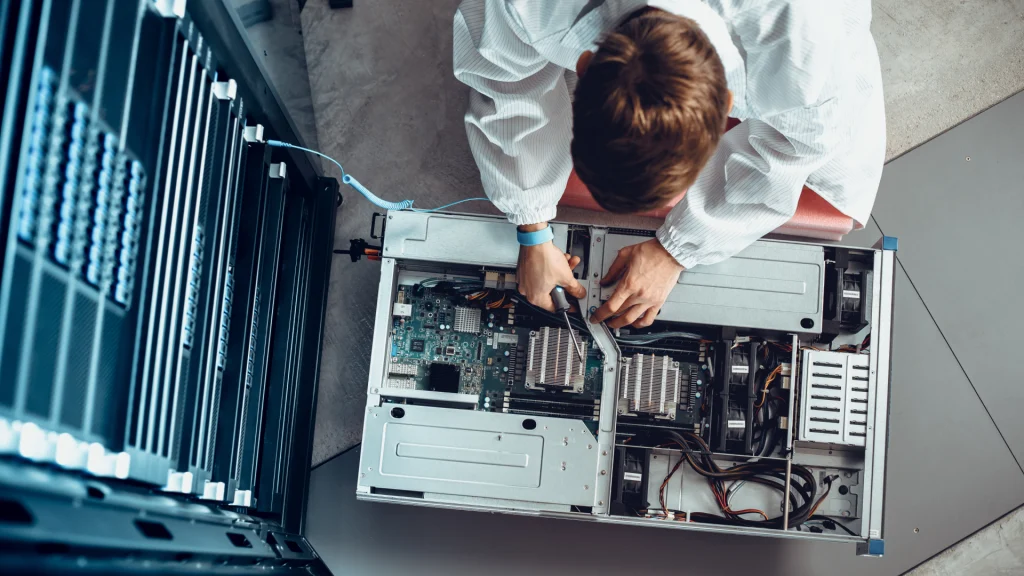

U.S. Legislative Agency: Data Pipeline Optimization & Governance

The Challenge

A U.S. Legislative Agency needed to modernize and automate ETL processes supporting an enterprise data warehouse used by multiple mission and business applications. Manual processes limited scalability, slowed delivery, and constrained governance.

The Solution

Hitachi helped the agency automate its ETL pipelines and establish a data governance foundation capable of supporting future optimization, data quality, and insight initiatives, all without disrupting existing operations.

Outcomes

- Automated formerly manual ETL workflows across multiple enterprise applications

- Improved data governance, quality, and trust

- Faster onboarding and training for new developers

- Accelerated time-to-value for new use cases

- A scalable foundation for future optimization and analytics programs